- 12/08/2025: A collaborative paper entitled "The Role of Artificial Intelligence in Deep Brain Stimulation" is published in Seminars in Neurology [Paper]

- 12/03/2025: Dr. Fang gave a talk on "Intelligence in Nature, Insight in Machines" at the Center for Machine Learning, Harvard University.

- 11/20/2025: We will organize a workshop, "Medical Video AI Assessment and Uncertainty Quantification: Bridging Research and Practice," at the IEEE International Symposium on Biomedical Imaging (ISBI) 2026 with Dr. Feng Yang, Dr. Yan Zhang, and Dr. Qian Cao from the FDA and Dr. Maria A. Zuluaga from EURECOM.

- 10/27/2025: Our paper entitled "Integrating Retinal Fundus Imaging and Computable Phenotypes for Subphenotype Discovery and LLM-Assisted Early and Late Onset Alzheimer's Diagnosis" is accepted by SPIE Medical Imaging 2026 [Paper]

- 10/24/2025: A collaborative paper entitled "Revealing Neurocognitive and Behavioral Patterns by Self-Supervised Manifold Learning from Dynamic Brain Data" is published in Nature Computational Science [Paper]

- 09/18/2025: Congratulations to SMILE Lab student Chintan Acharya on the publication of his first-author paper, "TRACE: Applying AI Language Models to Extract Ancestry Information from Curated Biomedical Literature" [Paper]

- 07/11/2025: Dr. Fang is invited to give a talk at the Athinoula A. Martinos Center for Biomedical Imaging, Massachusetts General Hospital (MGH), HMS, and MIT on “New Vision into Brain Health through Multimodal Digital Twins and Medical Foundation Models”.

- 07/03/2025: Our collaborative proposal entitled "Effects of Cannabis Constituents on the Reinforcing Properties of Fentanyl in Rats: A Behavioral and Neurobiological Approach" is funded by 2025 MMJ Clinical Outcomes Research.

- 06/27/2025: Our paper entitled "Population-Level Predictive Variation in Machine Learning Diagnosis of Symptomatic Bacterial Vaginosis" is accepted by npj Women's Health!

- 06/25/2025: One paper accepted by ICCV 2025.

- 06/17/2025: One paper accepted by MICCAI 2025.

- 06/16/2025: Dr. Fang is ranked among the Top 100 (top 0.4%) researchers worldwide in image analysis, according to ScholarGPS.

- 06/06/2025: Dr. Fang gave an invited talk at Harvard Medical School (HMS) and Mass General Brigham (MGB).

- 06/01/2025: Dr. Fang is visiting Harvard University, Harvard Medical School (HMS), and Mass General Brigham (MGB) as a Visiting Associate Professor.

- 04/21/2025: Congratulations to SMILE Lab student Gavin Hart on winning second place for best poster at the CAM Research Day.

- 04/16/2025: Dr. Fang started her sabbatical visit at the University of Tokyo and RIKEN as a Visiting Associate Professor.

- 03/17/2025: A collaborative paper entitled "Automated Imaging Differentiation for Parkinsonism" is published in JAMA Neurology [News] [Paper]

- 02/26/2025: Congratulations to SMILE Lab Ph.D. student Skylar Stolte on winning the Association for Academic Women’s (AAW) Emerging Scholar Award! [News]

- 02/21/2025: Dr. Fang gave a talk at Stanford University, Stanford Science Fellow Program, titled "Strategic Career Development for the Modern Scientists".

- 02/20/2025: Dr. Fang receives the Herbert Wertheim College of Engineering Doctoral Dissertation Advisor/Mentoring Award [News]

- 02/11/2025: Dr. Fang gave a talk at Stanford University, Department of Radiology, titled "A Tale of the Brain and AI: Multimodal Digital Twins and 3D Vision Foundation Model"

- 02/07/2025: Dr. Fang gave a talk at Stanford University, Stanford Science Fellow Program, titled "Peek into the Brain through AI: Multimodal Digital Twins and 3D Vision Foundation Models"

- 01/23/2025: Dr. Fang gave a talk at Stanford University, Department of Radiation Oncology, titled "Unlocking Brain Health through Multimodal Digital Twins and 3D Vision Foundation Models"

- 01/23/2025: Dr. Fang gave a talk at a virtual seminar of the UC Berkeley-UC San Francisco Computational Precision Health (CPH) program, titled "Unlocking Brain Health through Multimodal Digital Twins and 3D Vision Foundation Models".

- 01/18/2025: Dr. Fang will serve as an Associate Editor for the IEEE EMBS Conference Editorial Board (CEB) for the 47th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), July 14-18, 2025, Copenhagen, Denmark.

- 01/16/2025: Dr. Fang will serve on the Program Committee and as an Area Chair of MICCAI 2025.

- 01/12/2025: One paper entitled "A Comprehensive Survey of Foundation Models in Medicine" is accepted by IEEE Reviews in Biomedical Engineering (RBME)! Kudos to Wasif! [News]

- 01/07/2025: Congratulations to SMILE Lab PhD candidate Chaoyue Sun on receiving the International Student Achievement Awards Certificate of Outstanding Merit from the International Center.

- 12/06/2024: Dr. Fang receives the UF BME Departmental Faculty Teaching Excellence Award. [News]

- 12/06/2024: Congratulations to SMILE Lab Ph.D. student Skylar Stolte on receiving the UF BME Departmental Graduate Student Excellence Award! [News]

- 12/06/2024: Congratulations to SMILE Lab Ph.D. student Joseph Cox on receiving the UF BME Departmental Excellence in Supervised Teaching Award! [News]

- 11/11/2024: Congratulations to SMILE Lab Ph.D. student Skylar Stolte on being awarded the CAM Dissertation Award in the amount of $2,500!

- 11/08/2024: Congratulations to SMILE Lab PhD candidate Seowung Leem on receiving the International Student Achievement Awards Certificate of Outstanding Merit from the International Center. [News]

- 11/04/2024: Two papers are accepted by the 6th International Brain Stimulation Conference. Congrats to Skylar Stolte and Junfu Cheng!

- 11/01/2024: Dr. Fang started her sabbatical visit at Stanford University as a Visiting Associate Professor.

- 10/29/2024: Dr. Fang presents at UF AI Day 2024 on the AI Winners Panel, discussing AI across the curriculum.

- 10/25/2024: SMILE Lab students Daniel Rodriguez, Junfu Cheng, and Skylar Stolte presented their poster on "Baseline Clinical Characteristics and Machine Learning Predict DCS Treatment Outcomes for Anxiety" at BMES 2024.

- 10/24/2024: SMILE Lab students Jason Chen, Veronica Ramos, and Skylar Stolte presented their poster on "Comparison of GRACE and SynthSeg Deep Learning Models for Whole-Head Brain Segmentation" at BMES 2024.

- 10/01/2024: Dr. Fang received the Stanford Science Visiting Professorship (SSVP) from the School of Humanities and Sciences of Stanford University.

- 01/15/2024: A paper co-authored by Dr. Fang, Skylar Stolte, and collaborators, entitled "Artificial Intelligence-Optimized Non-Invasive Brain Stimulation and Treatment Response Prediction for Major Depression", has been published in Bioelectronic Medicine.

- 09/24/2024: Congrats to SMILE Lab Ph.D. student Seowung Leem on winning the Best Poster Award at the Graduate Pruitt Research and Alumni Engagement Day!

- 09/06/2024: Dr. Fang's insights are featured in a National Academies of Sciences, Engineering, and Medicine publication on AI and neuroscience! [Link]

- 09/06/2024: Congratulations to SMILE Lab Ph.D. student Skylar Stolte on winning the $1,000 CAM (Center for Cognitive Aging and Memory) Student Travel Award!

- 08/26/2024: Congratulations to SMILE Lab Ph.D. Alumna Yao Xiao on starting a new position as an Assistant Professor at the Mayo Clinic.

- 08/08/2024: Congrats to SMILE Lab Ph.D. student Joseph Cox on the acceptance of his first-author paper, "BrainSegFounder: Towards 3D Foundation Models for Neuroimage Segmentation," by the journal Medical Image Analysis! [Link]

- 07/26/2024: Congrats to SMILE Lab Ph.D. student Skylar Stolte on receiving the prestigious NIH F31 Ruth L. Kirschstein Predoctoral Individual National Research Service Award!

- 06/17/2024: Dr. Fang has been selected to participate in the 2024-2025 class of the University of Florida Leadership Academy.

- 06/08/2024: Congratulations to SMILE Lab alumna Gianna Sweeting on being accepted into Meharry Medical College's MD program!

- 05/14/2024: Dr. Fang gave an invited talk at the University of Tokyo and RIKEN, Japan, on neuroscience-inspired AI and AI for brain health, hosted by Dr. Tatsuya Harada and Dr. Lin Gu.

- 04/29/2024: Kudos to SMILE Lab PhD candidate Seowung Leem on his paper being accepted by IEEE EMBC as an oral presentation!

- 04/18/2024: Congratulations to SMILE Lab PhD student Seowung Leem on receiving the IEEE EMBC NextGen Scholar Award from the IEEE Engineering in Medicine and Biology Society (EMBS), 2024!


- 04/08/2024: Congratulations to Skylar Stolte on being selected as a 2023-2024 Attributes of a Gator Engineer Award winner in Creativity!

- 04/03/2024: Congratulations to SMILE Lab PhD student Joseph Cox on being selected as an NIAAA T32 Fellow! [News]

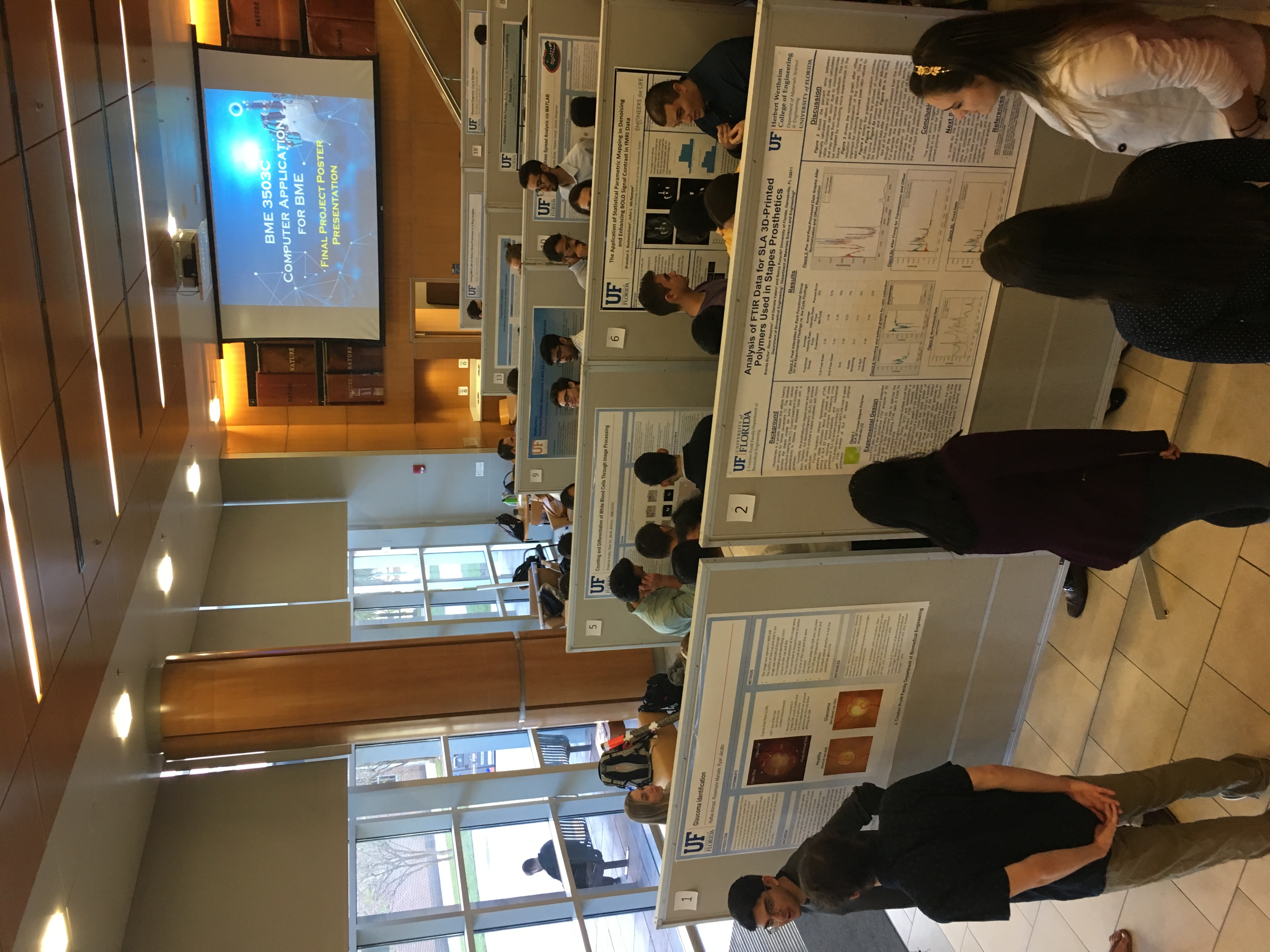

- 04/03/2024: Dr. Fang has been awarded the inaugural "AI Course Award". This award was created by the AI2 Center, the Center for Teaching Excellence (CTE), and the Center for Instructional Technology & Training (CITT) to honor UF instructors who have developed high-quality AI courses. She will be recognized at the Interface Conference on April 17, 2024. [News]

- 04/02/2024: A new paper entitled "DeepDynaForecast: Phylogenetic-informed graph deep learning for epidemic transmission dynamic prediction" has been accepted by PLOS Computational Biology! Congrats to Chaoyue! [Paper]

- 03/27/2024: Congratulations to SMILE Lab PhD student Skylar Stolte on winning the Cluff Aging Research Award!

- 03/26/2024: A new paper entitled "Neuron-Level Explainable AI for Alzheimer’s Disease Assessment from Fundus Images" is accepted by Nature Scientific Reports! [Link]

- 03/21/2024: Congratulations to SMILE Lab undergraduates Amy Lazarte and Jason Chen on being selected as AI Scholars in the University Scholars Program (USP)!

- 03/04/2024: Dr. Fang is invited to give a talk at the National Academy of Sciences (NAS) workshop titled "Exploring the Bidirectional Relationship between Artificial Intelligence and Neuroscience", March 25-26, 2024, in Washington, DC. [News]

- 02/28/2024: Dr. Fang is named to the Class of 2024 Senior Members of the National Academy of Inventors (NAI)! [News]

- 02/27/2024: Congrats to SMILE Lab PhD student Chaoyue Sun on winning the Best Paper Award at the HEALTHINF 2024 Conference!

- 02/24/2024: SMILE Lab has a third paper accepted in just the first two months of 2024: "Emergence of Emotion Selectivity in Deep Neural Networks Trained to Recognize Visual Objects", in PLOS Computational Biology! Kudos to SMILE Lab postdoc associate Dr. Peng Liu! [Link]

- 02/10/2024: SMILE Lab has a new paper entitled "Deep Learning Predicts Prevalent and Incident Parkinson’s Disease From UK Biobank Fundus Imaging" accepted by Nature Scientific Reports!

- 01/08/2024: Congrats to Skylar Stolte on her first-author journal paper entitled "Precise and Rapid Whole-Head Segmentation from Magnetic Resonance Images of Older Adults using Deep Learning" being accepted by Imaging Neuroscience!

- 12/20/2023: A new paper is accepted by the Journal of Visual Communication and Image Representation!

- 12/01/2023: Welcome Diandra Ojo to SMILE Lab as a Postdoc Associate!

- 11/17/2023: A new paper is published in the Nature Portfolio journal npj Digital Medicine on bias and disparity of AI in diagnosing women's health issues. [Paper] [News]

- 10/24/2023: Dr. Fang gave an invited talk, "AI for Medical Image Analysis 101", at the 3rd Annual AI Symposium at UF Health Cancer Center: Insights in Cancer Imaging. [Link]

- 10/12/2023: Dr. Fang and Dr. Parisa Rashidi co-hosted a Special Session on "AI in Biomedical Engineering" at BMES 2023. Department chairs from the University of Washington, Cornell, the University of Florida, Boston University, and Clemson University were invited to share their thoughts in this panel discussion.

- 10/11/2023: Congratulations to SMILE Lab PhD Candidate Skylar Stolte on placing 2nd in the Women in MICCAI (WiM) Inspirational Leadership Legacy (WILL) Initiative competition! [Link]

- 10/09/2023: Dr. Fang is selected as a Rising Star (Engineering) to be recognized at the Academy of Science, Engineering, and Medicine of Florida (ASEMFL) Annual Meeting on November 3-4, 2023. [News][College News]

- 10/09/2023: Dr. Fang becomes a MICCAI 2023 Mentor!

- 10/08/2023: Dr. Fang presented as a keynote speaker at the Pre-MICCAI conference at the University of British Columbia (UBC), Vancouver, Canada.

- 09/01/2023: Welcome Peng Liu to SMILE Lab as a Postdoc Associate!

- 09/01/2023: Congratulations to SMILE Lab Ph.D. student Daniel Rodriguez on being selected to receive the NIH T1D&BME T32 Fellowship! [News]

- 08/18/2023: A new collaborative grant (DRPD-ROF2023) entitled "Learning optimal treatment strategies for hypotension in critical care patients with acute kidney injury using artificial intelligence" has been funded by the University of Florida!

- 08/09/2023: Dr. Fang receives a new NSF award on brain-inspired AI entitled "NCS-FO: Brain-Informed Goal-Oriented and Bidirectional Deep Emotion Inference" as the PI, with Co-PIs Dr. Mingzhou Ding and Dr. Andreas Keil. [News] [NSF Link]

- 08/07/2023: Dr. Fang gave a talk at the MRI Research Institute (MRIRI), Weill Cornell Medical College, Cornell University on "Generative, Trustworthy, and Precision AI in Radiology".

- 08/01/2023: Dr. Fang will serve as the President of Women in MICCAI, International Society of Medical Image Computing and Computer Assisted Intervention (MICCAI). [News]

- 07/31/2023: Two papers from SMILE Lab PhD students Joseph Cox and Seowung Leem will be presented at BMES 2023 in Seattle, WA!

- 06/26/2023: One paper entitled "DOMINO++: Domain-aware Loss Regularization for Deep Learning Generalizability" is accepted by MICCAI 2023 in Vancouver, Canada. Kudos to Skylar!

- 05/23/2023: A new paper entitled "Machine-Learning Defined Precision tDCS for Improving Cognitive Function" is accepted by Brain Stimulation!

- 05/17/2023: An editorial on "Frontiers of Women in Brain Imaging and Brain Stimulation" authored by Dr. Fang is published in Frontiers in Human Neuroscience.

- 05/16/2023: Dr. Fang gave an invited talk at the Nvidia Artificial Intelligence Technology Center (NVAITC) on "Trustworthy AI and Large Vision Models for Neuroimages".

- 04/25/2023: Dr. Fang will serve as a mentor for Dr. Joseph Gullett, who is awarded an NIH/NIA K23 on "Using Artificial Intelligence to Predict Cognitive Training Response in Amnestic Mild Cognitive Impairment".

- 04/25/2023: A new collaborative R01 award entitled "Cognitively engaging walking exercise and neuromodulation to enhance brain function in older adults" is funded by NIH/NIA!

- 04/19/2023: SMILE Lab PhD student Joseph Cox taught the AI Bootcamp of the AI4Health Conference in Orlando.

- 04/10/2023: Congratulations to SMILE Lab PhD student Hong Huang on his work "Distributed Pruning Towards Tiny Neural Networks in Federated Learning" being accepted by the 43rd IEEE International Conference on Distributed Computing Systems (ICDCS 2023) (acceptance rate 18.9%)!

- 04/08/2023: Congrats to SMILE Lab alumna and Dream Engineering Team vice president Neeva Sethi on being one of the five inductees to the UF CLAS Hall of Fame!

- 04/07/2023: Congrats to SMILE Lab PhD student Tianqi Liu on passing his PhD Proposal Defense!

- 04/06/2023: Dr. Fang appears in the National Geographic story "Your eyes may be a window into early Alzheimer's detection"! [News]

- 04/06/2023: SMILE Lab received an Oracle for Research Cloud Starter Award!

- 04/05/2023: SMILE Lab undergraduate researchers Grace Cheng and Akshay Ashok have been selected to receive the UF AI Scholarship in its first offering! Congrats to Grace and Akshay! [News]

- 03/31/2023: SMILE Lab is featured in the Forward & Up video by the College of Engineering. [Video]

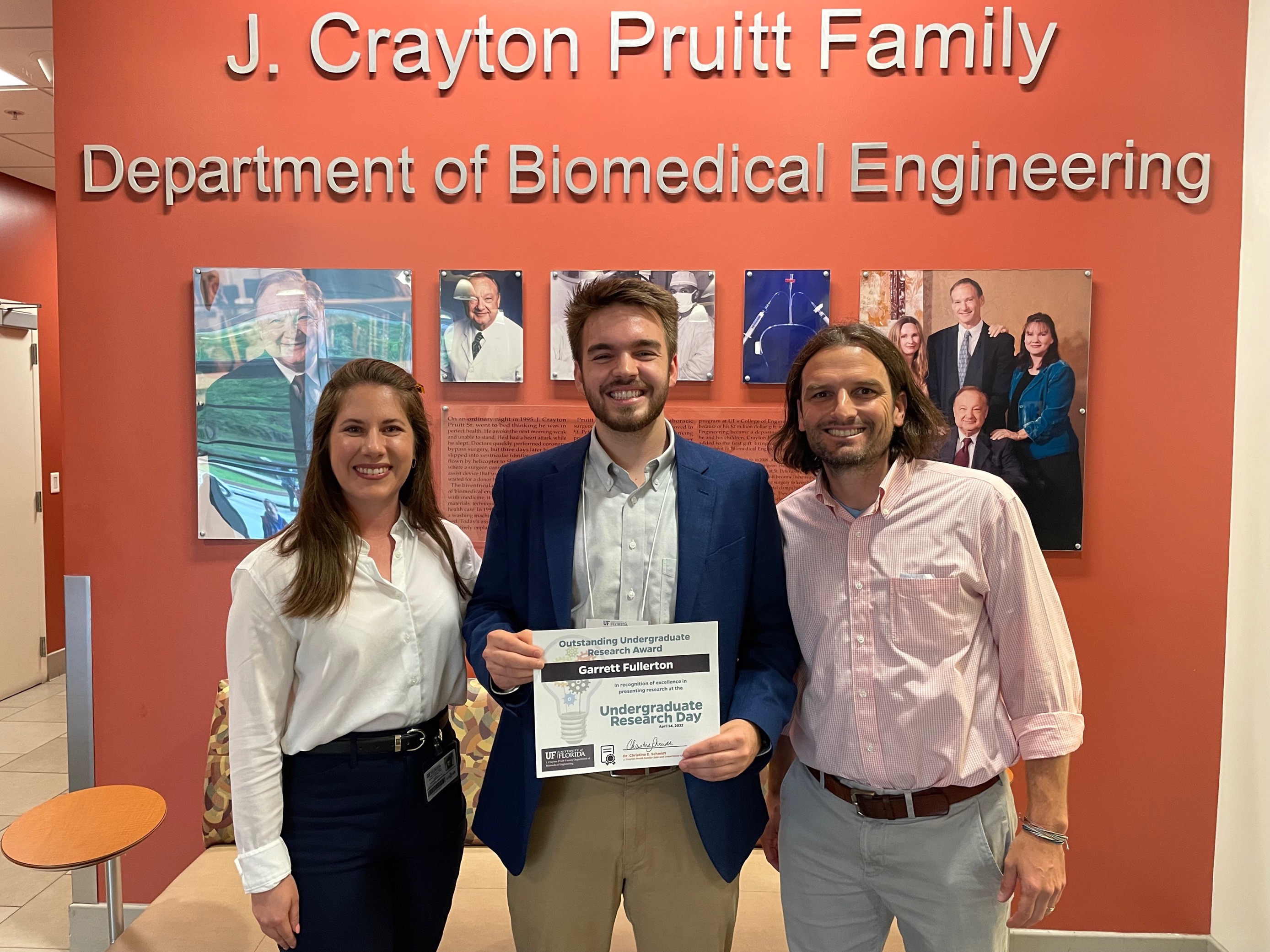

- 03/31/2023: Congratulations to SMILE Lab Alumnus Garrett Fullerton on receiving the prestigious National Science Foundation Graduate Research Fellowship (NSF-GRFP)!

- 03/23/2023: Congratulations to SMILE Lab Alumna Neeva Sethi on receiving the prestigious Presidential Service Award at the University of Florida! [News]

- 03/06/2023: Dr. Fang gave a talk at the Intelligent Critical Care Center (IC3) on "Artificial Intelligence for Cognitive Aging: Novel Diagnosis and Personalized Intervention".

- 02/14/2023: Dr. Fang and SMILE Lab's research using the HiPerGator supercomputer for healthcare and humanity is featured by ABC Action News in the story "Supercomputer at the University of Florida is harnessing the awesome power of AI". [News]

- 02/11/2023: Our journal paper entitled "DOMINO: Domain-aware loss for deep learning calibration" has been accepted by Software Impacts. Kudos to Skylar! Code and pretrained models are available on GitHub and CodeOcean. [Paper]

- 12/09/2022: Dr. Fang is named a Pruitt Family Endowed Faculty Fellow for 2023-2026! [News]

- 12/02/2022: Dr. Fang delivered a keynote speech entitled "A Tale of Two Frontiers - When Brain Meets AI" at the Neural Information Processing Systems (NeurIPS) 2022 workshop on "Medical Imaging Meets NeurIPS" in New Orleans, Louisiana.

- 11/11/2022: Dr. Fang gave an invited talk at the College of Medicine, Stanford University, on "Artificial Intelligence in Cognitive Aging and Brain-Inspired AI".

- 11/01/2022: SMILE Lab PhD Candidate Skylar Stolte presented her work, "DOMINO: Domain-aware Calibration in Medical Image Segmentation", at the [Fall 2022 HiPerGator Symposium] [News].

- 10/25/2022: A new collaborative paper entitled "Association of Longitudinal Cognitive Decline with Diffusion MRI in Gray Matter, Amyloid, and Tau Deposition" is accepted by Neurobiology of Aging!

- 09/20/2022: Kudos to SMILE Lab PhD student Skylar Stolte for being named runner-up for the Women in MICCAI Best Paper Presentation Award! [News]

- 08/24/2022: Congrats to SMILE Lab PhD Candidate Skylar Stolte on passing her dissertation qualifying exam!

- 08/20/2022: Welcome two new Master's students, Everett Schwieg and Fan Yang, to SMILE Lab!

- 01/06/2023: Welcome two new Ph.D. students, Chaoyue Sun and Hong Huang, to SMILE Lab!

- 08/10/2022: Congrats to SMILE Lab PhD student Skylar Stolte on her paper being selected for oral presentation (oral acceptance rate 2.3%) at MICCAI 2022!

- 08/09/2022: Kudos to SMILE Lab PhD student Seowung Leem on his paper being accepted by the Annual Meeting of the Society for Neuroscience (SfN) 2022!

- 06/28/2022: Dr. Fang is awarded Tenure and Promotion to Associate Professor! [News]

- 06/20/2022: Congratulations to SMILE Lab PhD student Skylar Stolte on receiving the MICCAI Student Travel Award!

- 06/07/2022: Dr. Fang gave an invited talk at the Gordon Research Conference (GRC) Image Science: Emerging Imaging Techniques at the Intersection of Physics and Data Science, on the topic "From Zero to One: Physiology-Informed Deep Learning for Contrast-Free CT Perfusion in Stroke Care" in Newry, ME, United States.

- 06/01/2022: Dr. Fang gave an invited talk at the Gordon Research Conference (GRC) Systems Aging: Systemic Processes, Omics Approaches and Biomarkers in Aging, on the topic "Artificial Intelligence and Machine Learning for Cognitive Aging: Novel Diagnosis and Precision Intervention" in Newry, ME, United States.

- 05/25/2022: A new paper entitled "PIMA-CT: Physical Model-Aware Cyclic Simulation and Denoising for Ultra-Low-Dose CT Restoration" is published in Frontiers in Radiology. Congrats to recent SMILE Lab PhD graduate Peng Liu and recent undergraduate alumnus Garrett Fullerton! [Link]

- 05/23/2022: Welcome new Master's student Ayesha Naikodi to SMILE Lab!

- 05/12/2022: Welcome new Ph.D. student Joseph Cox to SMILE Lab!

- 05/05/2022: Our paper entitled "DOMINO: Domain-aware Model Calibration in Medical Image Segmentation" is early accepted by MICCAI 2022 in Singapore, Sep. 18-22, 2022 (early acceptance rate 13%)! Congrats to Skylar Stolte!

- 05/03/2022: Dr. Fang was interviewed by ABC WCJB TV Tech Tuesday on her research using artificial intelligence (AI) to detect Alzheimer's disease. [Interview]

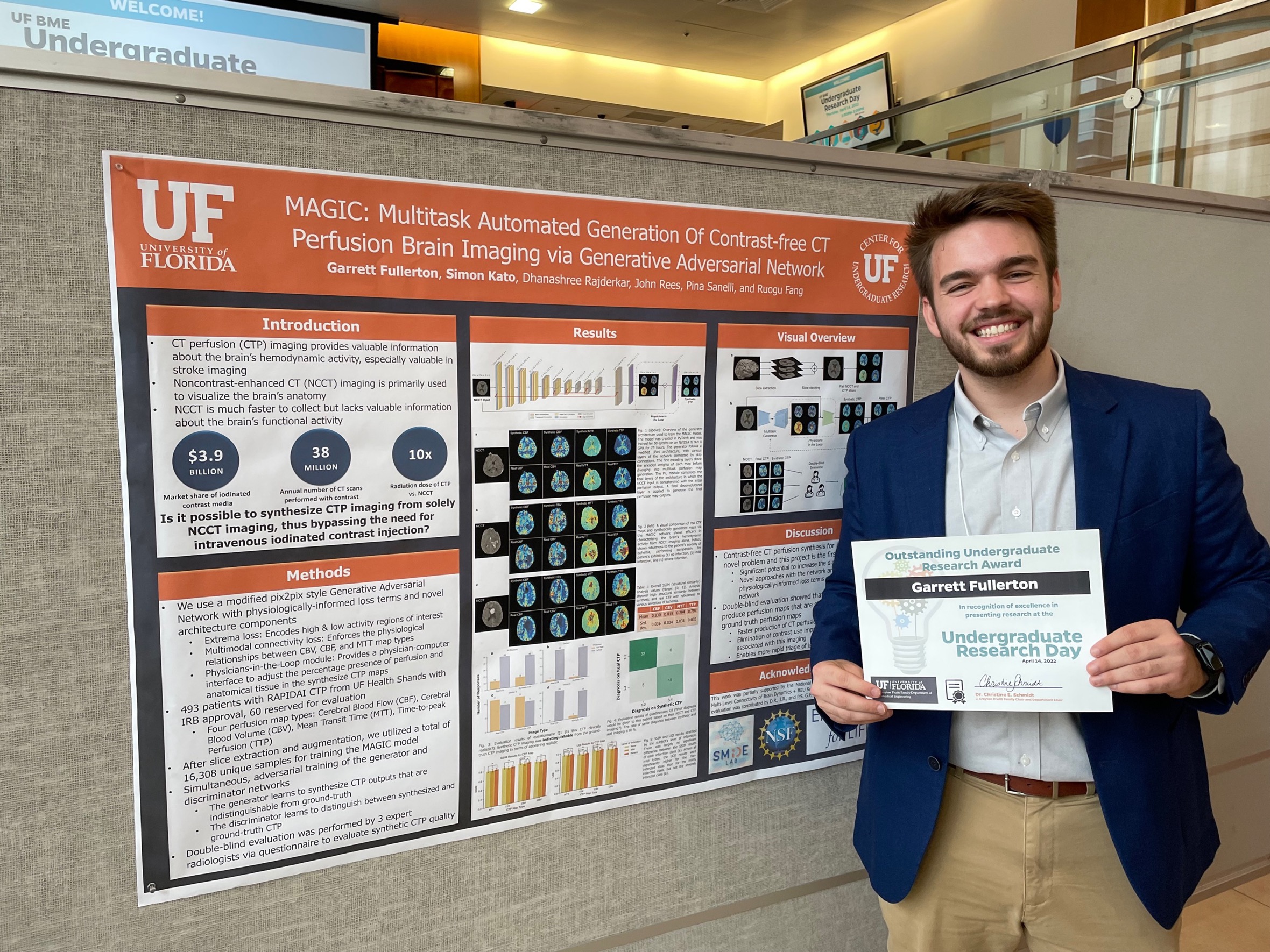

- 04/14/2022: Congratulations to SMILE Lab member Garrett Fullerton on winning the Outstanding Research Award at the BME Undergraduate Research Day! [News]

- 03/20/2022: Welcome new Ph.D. student Ziqian Huang to SMILE Lab!

- 03/11/2022: A new collaborative $10.7M P01 entitled "Multi-Scale Evaluation and Mitigation of Toxicities Following Internal Radionuclide Contamination" (PI: Gayle E. Woloschak) is funded by NIH NIAID!

- 03/04/2022: Dr. Fang is selected to receive the Herbert Wertheim College of Engineering 2022 Faculty Award for Excellence in Innovation! [News]

- 03/01/2022: Dr. Fang is appointed as the Associate Director of the UF Intelligent Critical Care Center (IC3).

- 02/16/2022: Dr. Fang will serve as Topic Editor on "Women In Brain Imaging and Stimulation" with Frontiers in Human Neuroscience. Submissions to this exciting topic are welcome!

- 02/12/2022: Dr. Fang will serve as Area Chair of the 25th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI) 2022 in Singapore. Papers are invited for this top conference in medical image analysis (MIA)!

- 02/02/2022: Dr. Fang and collaborators joined forces with Nvidia scientists and OpenACC to accelerate brain science at the Georgia Tech GPU Hackathon. The StimulatedBrain AI@UF team drastically improved their processing time for evaluating datapoints collected from an individual brain. [News] [Twitter]

- 01/18/2022: Dr. Fang delivers an invited talk entitled "Modular machine learning for Alzheimer's disease classification from retinal vasculature" at the University of Oxford "Artificial Intelligence for Mental Health" seminar series.

- 01/09/2022: Welcome new Ph.D. student Tianqi Liu to SMILE Lab!

- 01/07/2022: Dr. Fang is interviewed by Ivanhoe on her collaborative work on artificial intelligence to prevent dementia. [News]

- 01/04/2022: Dr. Fang gives an invited talk entitled "Artificial Intelligence for Cognitive Aging - Novel Diagnosis and Precision Intervention" at the University of Florida Institute on Aging.

- 12/15/2021: Congrats to SMILE Lab's Dr. Peng Liu on graduating with his PhD!

- 12/14/2021: Dr. Fang receives the BME Departmental Faculty Research Excellence Award! [News]

- 12/07/2021: A new paper on artificial intelligence to predict dementia, entitled "Baseline neuroimaging predicts decline to dementia from amnestic mild cognitive impairment", is published in Frontiers in Aging Neuroscience! [Paper] [News]

- 11/30/2021: Kudos to SMILE Lab PhD student Peng Liu on winning the UNIQUE-IVADO Award for Best Abstract (Undergraduate and Graduate Level) at the Montreal AI & Neuroscience (MAIN) Conference! [Video] [News]

- 11/03/2021: Our lab's new AI paper entitled "Unraveling Somatotopic Organization in the Human Brain using Machine Learning and Adaptive Supervoxel-based Parcellations" is accepted by NeuroImage today! Kudos to first author Kyle See! [Link]

- 10/29/2021: Our lab's new paper on AI in domain adaptation entitled "CADA: Multi-scale Collaborative Adversarial Domain Adaptation for Unsupervised Optic Disc and Cup Segmentation" is published in Neurocomputing today! Kudos to Peng and Charlie! [Link]

- 10/28/2021: Congratulations to SMILE Lab member Peng Liu on successfully defending his Ph.D. dissertation entitled "Biology and Neuroscience-Inspired Deep Learning"! Congrats, Dr. Liu!

- 10/28/2021: A new collaborative paper, "Machine Learning for Physics-Informed Generation of Dispersed Multiphase Flow Using Generative Adversarial Networks", is published in Theoretical and Computational Fluid Dynamics! [Link]

- 10/22/2021: A new publication on AI in computational fluid dynamics entitled "Rotational and Reflectional Equivariant Convolutional Neural Network for data-limited applications: Multiphase Flow demonstration" in Physics of Fluids is now online! [Link]

- 10/09/2021: Dr. Fang will serve as session chair for the Optical Imaging Session of the Annual Meeting of the Biomedical Engineering Society (BMES), Oct. 6-9, 2021, in Orlando, FL.

- 09/30/2021: A new collaborative grant entitled "Creation of an intelligent alert to improve efficacy & patient safety in real time during fluoroscopic guided lumbar transforaminal epidural steroid injection" is funded by the I. Heermann Anesthesia Foundation.

- 09/30/2021: Dr. Fang has received an Oracle Research Project Award on "Explainable artificial intelligence for Alzheimer’s Disease Assessment from Retinal Imaging".

- 09/29/2021: Dr. Fang serves as the session chair of the Image Reconstruction Session at MICCAI 2021.

- 09/21/2021: A new 5-year U24 grant ($2.5M) entitled "Southern HIV and Alcohol Research Consortium Biomedical Data Repository" is funded by NIH NIAAA!

- 09/10/2021: A new 5-year P01 grant ($6.6M) entitled "Interventions to improve alcohol-related comorbidities along the gut-brain axis in persons with HIV infection" is funded by NIH NIAAA! [News]

- 09/09/2021: A new 4-year SCH grant ($1.2M) entitled "Collaborative Research: SCH: Trustworthy and Explainable AI for Neurodegenerative Diseases" is funded by the National Science Foundation! Dr. Fang is Co-PI on this Trustworthy and Explainable Artificial Intelligence (AI) award. [NSF] [News]

- 09/09/2021: A new 5-year P01 grant ($1.5M to UF) entitled "SHARE Program: Innovations in Translational Behavioral Science to Improve Self-management of HIV and Alcohol Reaching Emerging adults" is funded by NIH NIAAA!

- 08/27/2021: Welcome two new Ph.D. students, Charlie Tran and Seowung Leem, to SMILE Lab!

- 08/26/2021: Three abstracts are accepted by the Society for Neuroscience (SfN) Annual Meeting, to be held virtually November 8-11 and in Chicago November 13-16, 2021:

  - Emergence of emotion selectivity in deep neural networks trained to recognize visual objects
  - A deep neural network model for emotion perception
  - Machine learning defined precision tES for improving cognitive function in older adults

  Congrats to Peng and Alejandro!

- 08/24/2021: A new paper entitled "Machine Learning for Physics-Informed Generation of Dispersed Multiphase Flow Using Generative Adversarial Networks" is accepted by Theoretical and Computational Fluid Dynamics!


- 08/17/2021: Kudos to SMILE Lab PhD student Charlie Tran, who has been selected for the highly competitive NextProf Pathfinder 2021, to be held October 17-19, 2021 on the campus of the University of Michigan, Ann Arbor, co-sponsored by the University of California, San Diego. Congrats Charlie! [News]

- 08/11/2021: Congratulations to SMILE lab undergraduate student Gianna Sweeting on being selected to receive the John & Mittie Collins Engineering Scholarship from the Herbert Wertheim College of Engineering (HWCOE)! [News]

- 07/30/2021: A new collaborative 5-Year R01 grant ($2.3M) entitled "Acquisition, extinction, and recall of attention biases to threat: Computational modeling and multimodal brain imaging" has been funded by NIH NIMH!

- 07/15/2021: Our AI research on precision dosing for preventing dementia has been reported by UF News, WPLG Local 10 News (ABC-affiliated local TV), WCJB TV (ABC/CW+ affiliated local TV), and The Alligator.

- 06/14/2021: Dr. Fang presented as an invited speaker at the Cleveland Clinic-National Science Foundation Workshop "The Present and the Future of Artificial Intelligence in Biomedical Research".

- 06/04/2021: The NeuroAI T32 Machine Learning Workshop taught by Dr. Fang has been successfully completed! [News]

- 05/14/2021: A new 4-year MPI (Fang & Woods) RF1/R01 grant ($2.9M) on artificial intelligence for transcranial direct current stimulation (tDCS) in remediating cognitive aging has been funded by NIH! [News]

- 05/02/2021: Congrats to SMILE Lab PhD student Skylar Stolte on passing her Doctoral Comprehensive Exam!

- 04/07/2021: Congratulations to SMILE undergraduate student and incoming Ph.D. student Charlie Tran on receiving the McNair Graduate Assistantship!

- 03/18/2021: A new 5-year ($5M) NIH U01 grant is funded for a new AI tool to improve diagnosis of Parkinson’s and related disorders. [News]

- 03/01/2021: Congratulations to SMILer undergraduate Gianna Sweeting on being selected for the University Scholars Program!

- 02/26/2021: Dr. Fang's work on AI for Parkinson's disease diagnosis via eye scans has been reported by The Washington Post.

- 02/25/2021: Congratulations to SMILer undergraduate Gianna Sweeting on being selected as a Fernandez Family Scholar by the Herbert Wertheim College of Engineering for her excellent progress and interest in research and graduate studies. [News][Twitter]

- 02/18/2021: Dr. Fang has been selected as an Aspiring PI to attend the 2021 NSF SCH PI Workshop: Smart Health in the AI and COVID Era.

- 02/18/2021: SMILE Lab PhD student Peng Liu has been selected by NSF to attend the 2021 NSF SCH PI Workshop: Smart Health in the AI and COVID Era.

- 02/2021: Dr. Fang will serve as Track Chair for BMES 2021.

- 02/2021: Dr. Fang will serve on the Program Committee for MICCAI 2021.

- 01/25/2021: Congrats to Kyle See and Rachel Ho, whose joint paper on "TL1 Team Approach to Predicting Response to Spinal Cord Stimulation for Chronic Low Back Pain" has been accepted by Translational Science 2021!

- 12/2020: Dr. Fang, together with Dr. Mingzhou Ding and Dr. Andreas Keil, has been awarded the UF Research Artificial Intelligence Research Catalyst Fund for the project "VCA-DNN: Neuroscience-Inspired Artificial Intelligence for Visual Emotion Recognition"! [Link]

- 12/15/2020: Our paper on "Modular machine learning for Alzheimer's disease classification from retinal vasculature" has been accepted by Nature Scientific Reports! [Link]

- 12/2020: Dr. Fang has been interviewed by Forbes, RSNA, and Diagnostic Imaging on her research about AI for Parkinson's Disease Diagnosis via Eye Exam.

- 11/2020: Dr. Fang is interviewed by UFII on AI for brain functions. [Link]

- 10/30/2020: A paper on "Machine learning and individual variability in electric field characteristics predict tDCS treatment response" is published in Brain Stimulation. [PDF]

- 10/14/2020: Congratulations to SMILer Gianna Sweeting on receiving the Engineering College Scholarship from the Herbert Wertheim College of Engineering (HWCOE)! [News]

- 09/04/2020: Congratulations to BME Alumna Dr. Yao Xiao on receiving the prestigious 2020 BMES Career Development Award! [News]

- 08/20/2020: Welcome new Ph.D. student Skylar Stolte to SMILE Lab!

- 08/05/2020: Congrats to Skylar Stolte on her abstract entitled "Artificial Intelligence For Characterizing Heart Failure In Cardiac Magnetic Resonance Images" being accepted by the American Heart Association Scientific Sessions 2020!

- 07/16/2020: Our paper on "Physiological wound assessment from coregistered and segmented tissue hemoglobin maps" is published in the Journal of the Optical Society of America A. It is a collaborative work with Dr. Anuradha Godavarty at Florida International University. [Paper]

- 07/06/2020: Congratulations to SMILE Lab PhD student Peng Liu on receiving the UFII Graduate Student Fellowship for his project on Neuroscience-Inspired Artificial Intelligence! [News]

- 06/25/2020: Yao Xiao presented at the 2020 Annual Meeting of the Society for Imaging Informatics in Medicine (SIIM) on 'Multi-Series CT Image Super-Resolution by using Transfer Generative Adversarial Network'! [Link]

- 06/12/2020: SMILer Kyle B. See is highlighted in the BME Student Spotlight! [News]

- 05/30/2020: Congratulations to Skylar Stolte for her paper, 'A Survey on Medical Image Analysis in Diabetic Retinopathy', being accepted for publication in Medical Image Analysis! [Link]

- 05/21/2020: Congratulations to Kyle See on passing the PhD Departmental Comprehensive Exam!

- 05/04/2020: Garrett Fullerton and Simon Kato have been selected for the NSF REU program.

- 04/05/2020: Yao Xiao presented at the IEEE International Symposium on Biomedical Imaging (ISBI'20) on 'Transfer-GAN: Multimodal CT Image Super-Resolution via Transfer Generative Adversarial Networks'! [Paper]

- 04/30/2020: Yao Xiao has been selected as a Graduate Student Commencement Speaker at the College of Engineering Graduation Ceremony. [News] [Speech begins at 16:28]

- 04/07/2020: SMILer Charlie Tran has been selected to participate in the University of Florida's Ronald E. McNair Post-Baccalaureate Achievement Program. [News]

- 04/01/2020: SMILE Lab alumnus Daniel El Basha has been awarded the NSF Graduate Research Fellowship! [News]

- 03/25/2020: Congratulations to Yao Xiao on successfully defending her PhD dissertation!

- 02/25/2020: SMILer Garrett Fullerton has been accepted into the UF University Scholars Program to work on machine learning for medical image optimization. [News]

- 02/17/2020: Yao Xiao presented at SPIE Medical Imaging (SPIE MI'20) on 'Transfer generative adversarial network for multimodal CT image super-resolution'! [Talk]

- 02/07/2020: Congrats to Yao Xiao for her abstract, 'Multi-Series CT Image Super-Resolution by using Transfer Generative Adversarial Network', being accepted for presentation at the 2020 Annual Meeting of the Society for Imaging Informatics in Medicine (SIIM)! [Paper]

- 01/06/2020: Congrats to Yao Xiao for her paper, 'Transfer-GAN: Multimodal CT Image Super-Resolution via Transfer Generative Adversarial Networks', being accepted for publication at the IEEE International Symposium on Biomedical Imaging (ISBI'20), and on receiving the ISBI (NIH, NIBIB, NCI-funded) Student Travel Award and the UF GSC Student Travel Award! [Paper]

- 12/04/2019: Dr. Fang presented at the Annual Conference of the Radiological Society of North America (RSNA) on "Multimodal CT Image Super-Resolution via Transfer Generative Adversarial Network" with Ph.D. student Yao Xiao as the lead author. [Link]

- 10/16/2019: Congrats to Yao Xiao for her abstract, 'Transfer generative adversarial network for multimodal CT image super-resolution', being accepted for presentation at SPIE Medical Imaging (SPIE MI'20)! [Paper]

- 09/09/2019: Dr. Fang receives an NSF award entitled "III: Small: Modeling Multi-Level Connectivity of Brain Dynamics". [News]

- 08/30/2019: Congrats to Peng Liu on passing his dissertation qualification!

- 08/26/2019: Kudos to Peng Liu and Dr. Fang for their first patent at UF: "A Software Using a Genetic Algorithm to Automatically Build Convolutional Neural Networks for Medical Image Denoising"! [News]

- 08/20/2019: Welcome to new Ph.D. student Kyle See, who joins SMILE Lab!

- 08/20/2019: Congrats to our SSTP students on their fantastic project presentations!

- 07/26/2019: Our paper 'Development and Validation of Automated Imaging Differentiation in Parkinsonism: A Multi-Site Machine Learning Study' has been accepted for publication in The Lancet Digital Health. Congrats to SMILE Lab and Dr. David Vaillancourt!

- 08/20/2019: Two SSTP high school students, Imaan Randhawa and Aarushi Walia, successfully completed their summer research in SMILE Lab under the supervision of Dr. Fang and PhD student Yao Xiao.

- 06/28/2019: Dr. Fang receives a UF Informatics Institute Seed Fund Grant for research on smartphone-based diabetic retinopathy detection. [News]

- 06/13/2019: Kyle See is awarded an NIH CTSI TL1 Predoctoral Fellowship to study methods and mechanisms of cognition and motor control through data science. [News]

- 05/10/2019: Dr. Fang receives a collaborative CTSI Pilot Award to study cancer therapy-induced cardiotoxicity. [News]

- 05/07/2019: Congrats to Yao Xiao on successfully defending her proposal!

- 04/16/2019: Congrats to Peng Liu for his paper, 'Deep Evolutionary Networks with Expedited Genetic Algorithms for Medical Image Denoising', being accepted for publication in Medical Image Analysis! [News]

- 04/16/2019: Kudos to Yao Xiao for her paper, 'STIR-Net: Spatial-Temporal Image Restoration Net for CT Perfusion Radiation Reduction', being accepted for publication in Frontiers in Neurology, Stroke section. [News]

- 04/16/2019: SMILE Lab's Senior Design group presents their final project on smartphone-based diabetic retinopathy diagnosis.

- 03/07/2019: Maximillian Diaz has been accepted into the UF University Scholars Program to work on retina-based Parkinson's diagnosis. [News]

- 01/10/2019: Dr. Fang has been named a Senior Member of IEEE. [News]

- 05/17/2018: Dr. Fang has been awarded pilot funding for precision medicine by the University of Florida Informatics Institute and the Clinical and Translational Science Institute (CTSI). [News]

- 04/24/2018: SMILE Lab receives two first-place awards at the Diabetic Retinopathy Segmentation and Grading Challenge. [News]

- 03/26/2018: Akash and Akshay Mathavan have been accepted into the UF University Scholars Program to work on environmental risk factors for ALS. [News]

- 10/30/2017: Two master's students, Yangjunyi Li and Yun Liang, join SMILE Group. Welcome!

- 10/01/2017: Two UF BME seniors, Kyle See and Daniel El Basha, join SMILE Lab to work on big biomedical data analytics research supported by NSF REU. Welcome! [News]

- 08/2017: Yao is awarded the MICCAI 2017 Student Travel Award. Congrats to Yao! [News]

- 08/2017: Four papers from SMILE Lab will be presented at BMES 2017 in Phoenix, Arizona.

- 08/2017: SMILE Lab will move to the J. Crayton Pruitt Family Department of Biomedical Engineering at the University of Florida.

- 07/2017: One paper is accepted by the Machine Learning in Medical Imaging (MLMI) Workshop at MICCAI 2017. Congrats to Yao!

- 07/16/2017: Dr. Fang is invited to give a talk at the 4th Medical Image Computing Seminar (MICS) held at Shanghai Jiao Tong University, Shanghai, China.

- 06/2017: Yao won the NSF Travel Award to attend CHASE 2017 in Philadelphia, PA. Congrats to Yao! [News]

- 05/2017: Dr. Fang is invited by NSF as a panelist for Smart and Connected Health.

- 05/2017: One paper is accepted by CHASE 2017. Congrats to Yao!

- 04/2017: One paper is accepted by MICCAI 2017. Congrats to Ling!

- 04/2017: Dr. Fang is invited to give a talk at the Society for Brain Mapping and Therapeutics Annual Meeting in Los Angeles, CA.

- 03/2017: Dr. Fang attended the NSF Smart and Connected Health PI Meeting held in Boston, MA.

- 10/2016: PhD student Maryamossadat Aghili has been awarded travel grants from the NIPS WiML 2016 Program Committee and FIU GPSC to attend the WiML workshop at NIPS 2016 in Barcelona.

- 09/2016: Dr. Fang is invited by NIH to review for the BDMA study section.

- 09/2016: One paper is accepted by Pattern Recognition!

- 08/2016: Dr. Fang is selected as an Early Career Grant Reviewer by NIH.

- 08/2016: SMILE REU and RET students and teachers won the 2016 Best REU and RET Poster Awards at FIU SCIS.

- 07/2016: Our paper "Abdominal Adipose Tissues Extraction Using Multi-Scale Deep Neural Network" is accepted by Neurocomputing!

- 04/2016: PhD student Maryamossadat Aghili has been awarded travel grants to attend the Grad Cohort Conference (CRA-W).

- 05/2016: Dr. Fang's research appeared in FIU News: "Professor uses computer science to reduce patients' exposure to radiation from CT scans"!

- 05/2016: Dr. Fang has been selected to receive the 2016 Ralph E. Powe Junior Faculty Enhancement Award from Oak Ridge Associated Universities!

- 05/2016: Dr. Fang received an NSF CRII (Pre-CAREER) Award for "Characterizing, Modeling and Evaluating Brain Dynamics"!

- 04/2016: Our paper "TENDER: TEnsor Non-local Deconvolution Enabled Radiation Reduction in CT Perfusion" is accepted by Neurocomputing!

- 04/09/2016: Dr. Fang is awarded the first Robin Sidhi Memorial Young Scientist Award from the Annual Congress of the Society for Brain Mapping and Therapeutics in recognition of her continuous devotion to bridging information technology and health informatics.

- 03/2016: Our paper "Computational Health Informatics in the Big Data Age: A Survey" is accepted by the prestigious ACM Computing Surveys!

- 01/2016: Dr. Fang is invited by NSF as a panel reviewer for Smart and Connected Health.

- 12/2015: Dr. Fang serves as the Publicity Chair of the 14th IEEE International Conference on Machine Learning and Applications 2015.

- 04/2015: Dr. Fang is invited by NSF as a panel reviewer for Smart and Connected Health.
